More about the Software Testing Canvas

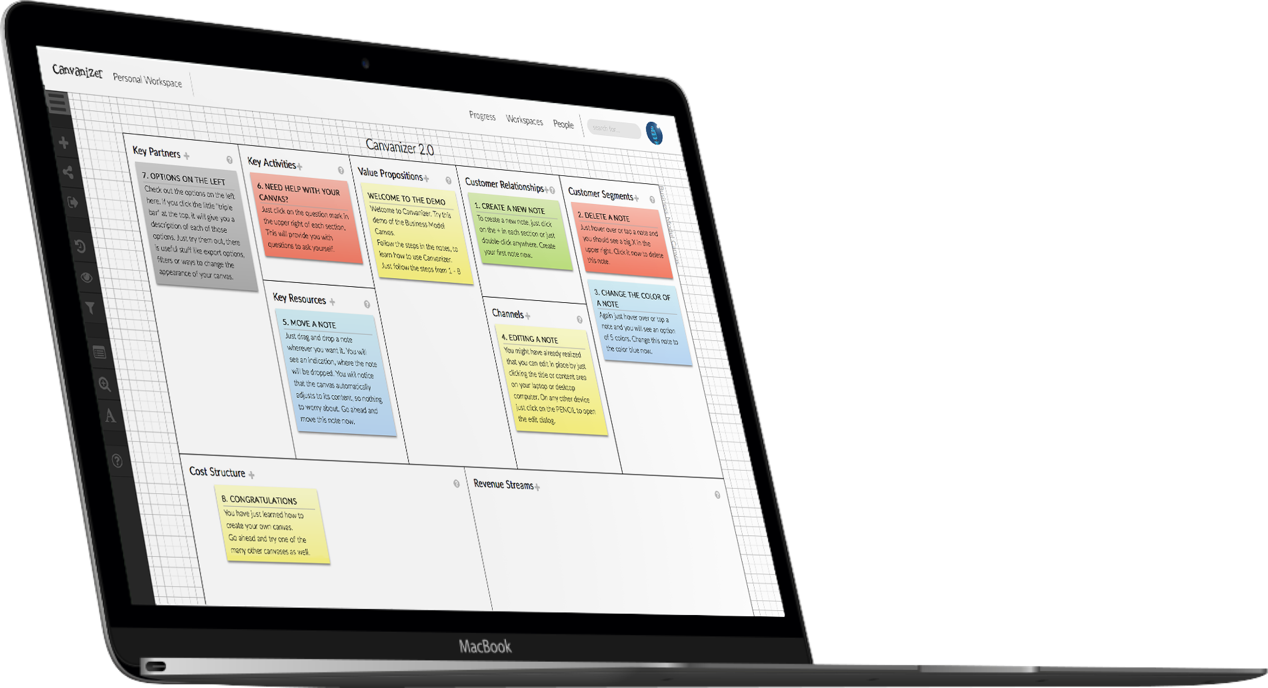

One of the ISTQB's Principles of Software Testing highlights the fact that software testing is 'context dependent'. In other words, a completely different strategy is required to test business applications, mobile apps and safety-critical software. A suitable testing strategy will also depend on whether a waterfall or agile development approach has been followed.

The Software Testing Canvas is a tool for developing a software testing strategy that is tailored to the context in which testing takes place. The canvas is populated by answering five fundamental questions that define a software testing strategy.

What will be tested?

This section of the canvas identifies the software components and software features that will be tested.

Why perform this test?

This section of the canvas identifies the objectives for performing the test. In her book 'Explore It!', Elisabeth Hendrickson identifies two overarching objectives for testing software: checking that software conforms to expectations; and exploring the risk of failures.

How will the test cases be designed?

Another ISTQB principle warns that 'exhaustive testing is impossible'. In other words, testers can never totally eliminate the risk of software failures. This is why Elisabeth Hendrickson suggests that testers should 'explore failures', rather than only attempting to 'check' that the software works as expected. It also means that there is potentially an infinite number of test cases that could be executed.

This section of the canvas identifies the 'basis' used to select a specific subset of test cases from the infinite number of possibilities. It also identifies any 'test oracle' that may be used to determine the expected outcome of a test.
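As a minimal sketch of these two ideas, the Python below uses boundary values as the test basis and a deliberately simple reference function as the test oracle. All of the names (`fast_clamp`, `reference_clamp`, `boundary_cases`, `run_checks`) are hypothetical and chosen only for illustration; they are not part of the canvas itself.

```python
def fast_clamp(value, low, high):
    """Hypothetical software under test: restrict value to [low, high]."""
    return max(low, min(value, high))


def reference_clamp(value, low, high):
    """Test oracle (assumed): a slow but obviously correct reference."""
    if value < low:
        return low
    if value > high:
        return high
    return value


def boundary_cases(low, high):
    """Test basis (assumed): values on and either side of each boundary."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def run_checks(low=0, high=10):
    """Return the inputs whose actual outcome differs from the oracle's."""
    return [
        value
        for value in boundary_cases(low, high)
        if fast_clamp(value, low, high) != reference_clamp(value, low, high)
    ]


if __name__ == "__main__":
    # An empty list means no selected case disagreed with the oracle.
    print(run_checks())
```

Here the basis reduces an unbounded input space to six cases, and the oracle supplies the expected outcome for each one. A different context might call for a different basis (for example, requirements or risk) and a different oracle (for example, a specification or a previous release).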

Who or what will perform the test?

This section of the canvas identifies the manual and automated agents that execute the test and compare the actual and expected outcomes.

Manual agents are human testers who either: follow a test specification designed to confirm that the software meets expectations; or explore the software attempting to identify failures.

Automated agents are software components that can repeatedly execute a large number of test cases, by automating the execution of the test.
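An automated agent of this kind could be sketched roughly as follows. The `Case` record and the `run_agent` function are assumptions made for this example, not an API from any particular testing tool; the agent simply executes each case and compares the actual outcome with the expected one.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Case:
    """Hypothetical test case: a name, the inputs and the expected outcome."""
    name: str
    args: tuple
    expected: Any


def run_agent(target, cases):
    """Execute every case against target and collect the mismatches."""
    failures = []
    for case in cases:
        actual = target(*case.args)
        if actual != case.expected:
            failures.append((case.name, case.expected, actual))
    return failures


# A large batch of cases that a human tester would not want to run by hand.
cases = [Case(f"abs({n})", (n,), n if n >= 0 else -n) for n in range(-500, 500)]

if __name__ == "__main__":
    print(len(run_agent(abs, cases)), "failures for the built-in abs")
    print(len(run_agent(lambda n: n, cases)), "failures for a broken stand-in")
```

Because the agent is just code, the same batch can be re-run after every change to the software being tested.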

How will the tests be executed?

This section of the canvas identifies how the agent executing the test will connect to the software being tested, and the configuration of the software components involved in the test.

Manual agents normally execute a test via the user interface. Automated agents may be included in source code, connect through an API or employ fixtures that simulate interaction with the user interface.

The software being tested often requires other software components, such as web browsers or database servers, to be included in the test configuration. These components are not themselves being tested, but they are required in order to execute the test. The test configuration must also include any automated test agents.
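To show how the five sections fit together, the answers could be captured in a simple structured record. The sketch below is one possible shape, assuming a hypothetical `SoftwareTestingCanvas` class; the field names and the sample values are illustrative and do not come from the canvas template.

```python
from dataclasses import dataclass, field


@dataclass
class SoftwareTestingCanvas:
    """Hypothetical record of the answers to the five canvas questions."""
    what: list          # components and features to be tested
    why: list           # objectives for performing the test
    basis: str          # how test cases are selected
    oracle: str         # how expected outcomes are determined
    agents: list        # manual and automated agents
    connection: str     # how the agent reaches the software being tested
    configuration: list = field(default_factory=list)  # supporting components

    def unanswered(self):
        """Return the names of the sections that are still empty."""
        return [name for name, value in vars(self).items() if not value]


canvas = SoftwareTestingCanvas(
    what=["checkout feature"],
    why=["check conformance to expectations", "explore the risk of failures"],
    basis="boundary values",
    oracle="",  # deliberately left blank to show the gap check
    agents=["human tester", "automated agent"],
    connection="API",
    configuration=["web browser", "database server"],
)

if __name__ == "__main__":
    print(canvas.unanswered())
```

A check like `unanswered()` is only a convenience for spotting gaps; the value of the canvas lies in the discussion needed to fill in each section.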